#Local private cache write through plus#

In this thesis we present a new simulator for parallel applications based on dependent tasks, which makes it possible to experiment with several data-locality models. It relies on recording a trace of the sequential execution of the target application, using OMPT, the standard OpenMP tools interface. We also introduce three performance models, two of which are locality-aware: a first model that accounts only for task execution times, a lightweight model that uses topology information to weight data transfers, and a more complex model that accounts for data storage in the LLC (Last Level Cache).
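To make the difference between the first two performance models concrete, here is a minimal sketch. The text does not say how the topology weighting is computed, so everything below is an assumption made for illustration: the `Task` type, the `NUMA_DISTANCE` matrix, the `LOCAL_BANDWIDTH` figure, and the choice to charge only the extra cost of remote accesses on top of the traced execution time.

```python
from dataclasses import dataclass, field

# Hypothetical 2-node NUMA distance matrix (10 = local access), not measured values.
NUMA_DISTANCE = [[10, 21],
                 [21, 10]]
LOCAL_BANDWIDTH = 10e9  # bytes per second, an assumed figure


@dataclass
class Task:
    exec_time: float  # seconds, as recorded in the sequential trace
    # Each input is a pair: (size in bytes, NUMA node that holds the data).
    inputs: list = field(default_factory=list)


def time_only_model(task: Task, node: int) -> float:
    """First model: only the task execution time counts."""
    return task.exec_time


def topology_weighted_model(task: Task, node: int) -> float:
    """Lightweight model: add a distance-weighted penalty for remote inputs."""
    penalty = 0.0
    for size, home in task.inputs:
        # Relative cost of reaching `home` from `node`; 1.0 means local.
        weight = NUMA_DISTANCE[node][home] / NUMA_DISTANCE[node][node]
        penalty += (weight - 1.0) * size / LOCAL_BANDWIDTH
    return task.exec_time + penalty


task = Task(exec_time=1.0, inputs=[(1_000_000_000, 0)])
local = topology_weighted_model(task, node=0)   # data is on the same node
remote = topology_weighted_model(task, node=1)  # data must cross the interconnect
```

With this weighting, a task scheduled on the node that already holds its data costs exactly its traced execution time, while the same task placed on the other node is charged an additional transfer penalty. The LLC-aware model would additionally need to track which data is resident in each last-level cache, which this sketch does not attempt.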

We model several shared-memory architectures, taking our own measurements to obtain their characteristics.

Anticipating the behavior of applications and studying and designing algorithms are among the most important goals of performance and correctness studies for simulations and applications in high-performance computing. Many frameworks have been designed to simulate large distributed computing infrastructures and the applications that run on them. At the node level, some tools have also been proposed to simulate task-based parallel applications. However, a critical capability missing from this work is support for non-uniform memory access (NUMA) effects, even though practically all HPC (High Performance Computing) platforms exhibit such effects today.

This work explores the granularity of compression schemes to redefine their perspectives and improve their efficiency, through a concept called Region-Chunk compression. Its goal is to achieve a low (good) compression ratio and fast decompression latency. The key observation is that further sub-dividing the chunks of data being compressed reduces data duplication. This concept can be applied to several previously proposed compressors, reducing their average compressed size. In particular, a single-cycle-decompression compressor is boosted to reach a compressibility level competitive with state-of-the-art proposals.

Finally, to increase the probability of successfully co-allocating compressed lines, Pairwise Space Sharing (PSS) is proposed. PSS can be applied orthogonally to compaction methods at no extra latency penalty and with a cost-effective metadata overhead. The proposed system (Region-Chunk+PSS) further enhances the normalized average cache capacity by 2.7% (geometric mean), while featuring short decompression latency.
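The granularity observation behind Region-Chunk compression can be illustrated with a toy experiment. The code below is not the Region-Chunk scheme itself, whose details are not given here; it is a simple deduplicating encoder, written only to show how splitting a cache line into smaller chunks can expose more repeated data. The function names, the 64-byte line, and the sample contents are all assumptions.

```python
import math


def dedup_compressed_bits(line: bytes, chunk_size: int) -> int:
    """Size in bits of a toy encoding: one copy of each distinct chunk,
    plus one dictionary index per chunk position."""
    chunks = [line[i:i + chunk_size] for i in range(0, len(line), chunk_size)]
    unique = set(chunks)
    index_bits = max(1, math.ceil(math.log2(len(unique))))
    return len(unique) * chunk_size * 8 + len(chunks) * index_bits


def compression_ratio(line: bytes, chunk_size: int) -> float:
    """Compressed size divided by original size, so lower is better."""
    return dedup_compressed_bits(line, chunk_size) / (len(line) * 8)


# A 64-byte line of eight 8-byte words that share their upper 4 bytes,
# as pointers into the same memory region often do (made-up values).
HIGH_HALF = b"\xff\x7f\x00\x00"
line = b"".join((i + 1).to_bytes(4, "little") + HIGH_HALF for i in range(8))

coarse = compression_ratio(line, chunk_size=8)  # every 8-byte word is distinct
fine = compression_ratio(line, chunk_size=4)    # the shared upper half repeats
```

At 8-byte granularity no two chunks are equal, so the toy encoder cannot remove anything and the index overhead even makes the line slightly larger. At 4-byte granularity the shared upper half is stored once, and the ratio drops below 1.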

Hardware compression schemes must comply with area, power and latency constraints. This study unveils the challenges of adopting compression in memory design. The goal of this analysis is not to summarize proposals, but to highlight the solutions they employ to handle those challenges. An in-depth description of the main characteristics of multiple methods is provided, as well as criteria that can be used as a basis for assessing such schemes. Typically, these schemes are not very efficient, and those that do compress well decompress slowly.

#Local private cache write through software#

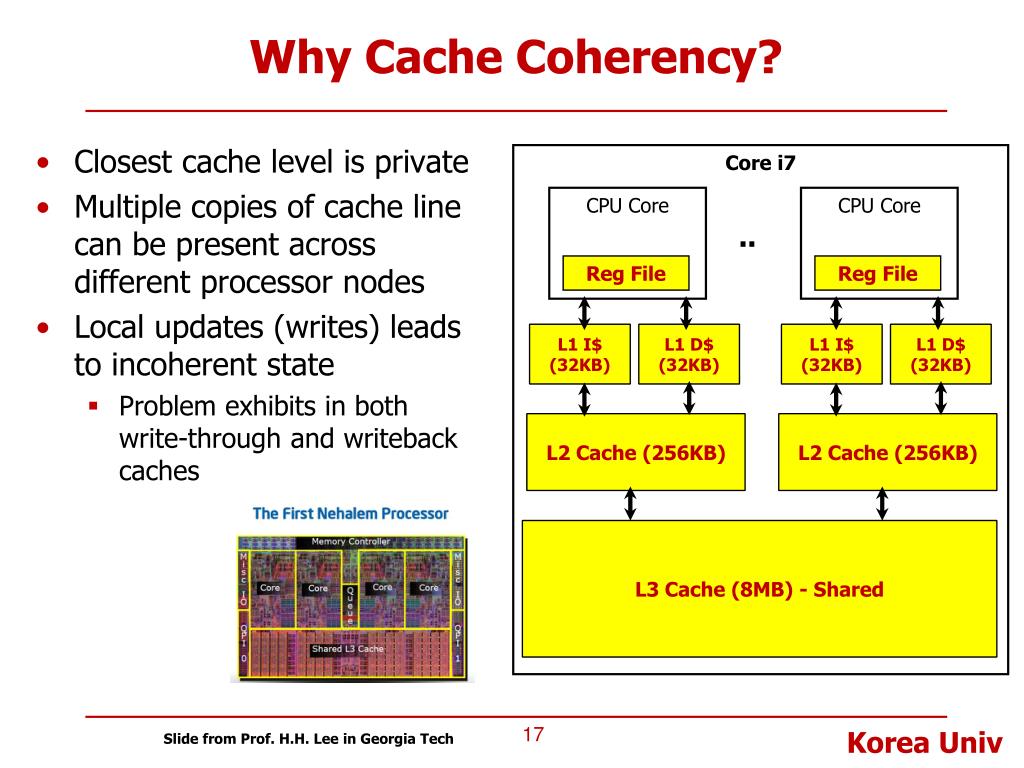

Hardware compression techniques are typically simplifications of software compression methods.

Sharing every cache level among all cores would incur a gargantuan wiring mess and adversely affect access latencies, so we are forced to use private caches and rely on controllers to cope with their drawbacks. It is common practice to make the first cache level private to keep its latency low, share the intermediate cache levels among some cores (e.g., per pairs or tetrads of cores), and share the last-level cache among all cores. Some coherence controllers work by having state machines that snoop on bus transactions, taking the appropriate action according to the cache line's state and the protocol in use. Coherence controllers can also rely on directories (distributed, centralized or hierarchical) to keep track of the blocks' copies without constantly broadcasting, which can easily saturate the system with traffic. Cache coherence protocols generally consist of a subset of the following states: Modified, Shared, Invalid, Exclusive, Owned and Forward.
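As an illustration of a snooping state machine, the sketch below models a MESI protocol, which uses four of the six states listed above (Modified, Exclusive, Shared, Invalid). It tracks the state of a single cache line across several private caches. The class and method names are hypothetical, and data movement and write-backs are mentioned only in comments, not modeled.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


class SnoopingBus:
    """Tracks one cache line in each private cache attached to a shared bus."""

    def __init__(self, n_caches: int):
        self.states = [State.INVALID] * n_caches

    def read(self, cid: int) -> None:
        if self.states[cid] is not State.INVALID:
            return  # read hit: no bus transaction needed
        # Read miss: a bus read is broadcast and every other cache snoops it.
        others_hold_copy = False
        for i, state in enumerate(self.states):
            if i != cid and state is not State.INVALID:
                # A MODIFIED copy would be written back before downgrading.
                self.states[i] = State.SHARED
                others_hold_copy = True
        self.states[cid] = State.SHARED if others_hold_copy else State.EXCLUSIVE

    def write(self, cid: int) -> None:
        if self.states[cid] in (State.MODIFIED, State.EXCLUSIVE):
            self.states[cid] = State.MODIFIED  # sole owner: silent upgrade
            return
        # Otherwise broadcast a read-for-ownership: all other copies are invalidated.
        for i in range(len(self.states)):
            if i != cid:
                self.states[i] = State.INVALID
        self.states[cid] = State.MODIFIED


bus = SnoopingBus(n_caches=2)
bus.read(0)   # cache 0 is the only holder
bus.read(1)   # both caches now share the line
bus.write(1)  # cache 1 takes ownership, cache 0 is invalidated
```

A directory-based controller would replace the broadcast loops with a lookup in a sharer list, so that only the caches that actually hold the block are contacted.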
